MIC MM OBJECT DETECTION CHALLENGE 2022

MIC Object Detection Challenge

We offer a challenge in which models are to be trained in a data-deficient setting. The challenge is based on the MIC21 dataset. Its main objective is to detect and classify objects of classes that are not usually included in computer vision (CV) tasks: cricketer [621 training instances], soccer player [571 training instances], chess player [733 training instances], van [307 training instances], sedan [312 training instances], police car [548 training instances], policeman [687 training instances], soldier [915 training instances], dancer [405 training instances], violinist [410 training instances].
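To give a sense of how little data is available per class, the instance counts listed above can be tallied in a few lines. This is only an illustrative sketch: the variable names are made up for the example, and the numbers are copied from the list above.

```python
# Training-instance counts per target class, copied from the task description.
TRAIN_INSTANCES = {
    "cricketer": 621,
    "soccer player": 571,
    "chess player": 733,
    "van": 307,
    "sedan": 312,
    "police car": 548,
    "policeman": 687,
    "soldier": 915,
    "dancer": 405,
    "violinist": 410,
}

total = sum(TRAIN_INSTANCES.values())
smallest = min(TRAIN_INSTANCES, key=TRAIN_INSTANCES.get)
largest = max(TRAIN_INSTANCES, key=TRAIN_INSTANCES.get)

print(f"classes: {len(TRAIN_INSTANCES)}, total instances: {total}")
# List the classes from the least to the most represented.
for name, count in sorted(TRAIN_INSTANCES.items(), key=lambda kv: kv[1]):
    print(f"{name:15s} {count:4d}  ({count / total:.1%})")
print(f"smallest: {smallest}, largest: {largest}")
```

The ten classes add up to 5509 training instances, ranging from 307 (van) to 915 (soldier).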

Participants must submit the output produced by their systems on the test data in the COCO JSON format, restricted to the target classes. They may use any available framework, such as YOLACT or Detectron2, with the COCO dataset as a benchmark dataset.

The MIC21 training and validation dataset is available here. The test dataset will be released on 1 August 2022.

Using any of the available COCO-based models (which detect objects such as person, car, truck), participants should provide annotations for objects of the following classes: cricketer, soccer player, chess player, van, sedan, police car, policeman, soldier, dancer, violinist. The training and evaluation datasets also contain annotations for objects associated with the objects selected for the task.

The output should be in the COCO JSON format and should contain information about images, categories and annotations (segmentations and bounding boxes). Evaluation and testing should be performed within the COCO testing framework.
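As a rough illustration of such a file, the sketch below assembles a minimal COCO-style dictionary with the three required sections. It is not an official template: the function name `build_coco_output`, the numeric category ids, the file name and the `score` field are assumptions made for the example. Participants should take the category ids from the released annotations.

```python
import json

# Target classes named in the task description. The numeric ids assigned
# below (1..10, in this order) are an ASSUMPTION for illustration only.
TARGET_CLASSES = [
    "cricketer", "soccer player", "chess player", "van", "sedan",
    "police car", "policeman", "soldier", "dancer", "violinist",
]


def build_coco_output(images, detections):
    """Assemble a COCO-style dict with images, categories and annotations.

    images:     list of (image_id, file_name, width, height) tuples
    detections: list of dicts with keys "image_id", "category" (class name),
                "bbox" ([x, y, width, height] in pixels) and "polygon"
                (flat list [x1, y1, x2, y2, ...]); "score" is optional
    """
    categories = [
        {"id": i, "name": name} for i, name in enumerate(TARGET_CLASSES, start=1)
    ]
    name_to_id = {c["name"]: c["id"] for c in categories}

    coco = {
        "images": [
            {"id": i, "file_name": f, "width": w, "height": h}
            for (i, f, w, h) in images
        ],
        "categories": categories,
        "annotations": [],
    }
    for ann_id, det in enumerate(detections, start=1):
        x, y, w, h = det["bbox"]
        coco["annotations"].append({
            "id": ann_id,
            "image_id": det["image_id"],
            "category_id": name_to_id[det["category"]],
            "bbox": [x, y, w, h],
            # Bounding-box area, used here as a simple stand-in for the mask area.
            "area": w * h,
            "segmentation": [det["polygon"]],
            "iscrowd": 0,
            # Confidence score; including it in the output is an assumption.
            "score": det.get("score", 1.0),
        })
    return coco


# Hypothetical example: one image with one detected van.
example = build_coco_output(
    images=[(1, "example_0001.jpg", 640, 480)],
    detections=[{
        "image_id": 1,
        "category": "van",
        "bbox": [10, 20, 100, 50],
        "polygon": [10, 20, 110, 20, 110, 70, 10, 70],
        "score": 0.9,
    }],
)
print(json.dumps(example, indent=2))
```

Bounding boxes follow the COCO convention of `[x, y, width, height]` with the origin at the top-left corner of the image, and each polygon is a flat list of alternating x and y coordinates.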

The participants are strongly encouraged to share their code (for example in GitHub) for the purposes of a reproducibility study.

Rules

We allow the use of data other than the provided training data. All training data used, as well as the approach adopted by the participants, should be thoroughly documented in the technical report.

We require the participants in the MIC MM Challenge to submit a technical report. Submissions of results without an associated technical report do not qualify for the competition.

The Organisers retain the right to disqualify any submissions that violate these rules.

The Organisers retain the right to extend the deadlines.

Technical reports

The technical reports submitted to the MIC MM Challenge should be between 3 and 6 pages long, plus up to 2 additional pages for references. The template for the submission is the CLIB 2022 template.

Upon acceptance, the technical reports will be published in the Proceedings of the International Conference Computational Linguistics in Bulgaria (CLIB 2022).

For more information about the dataset, you can check the following publication:

Koeva, S., I. Stoyanova, Y. Kralev. Multilingual Image Corpus – Towards a Multimodal and Multilingual Dataset. In: Proceedings of LREC 2022, pp. 1509-1518. (pdf)

Registration and submission

Preliminary registration for participation in the Challenge (by 30 June 2022), submission of output results (by 15 August 2022) and submission of technical reports (by 20 August 2022) take place via EasyChair.

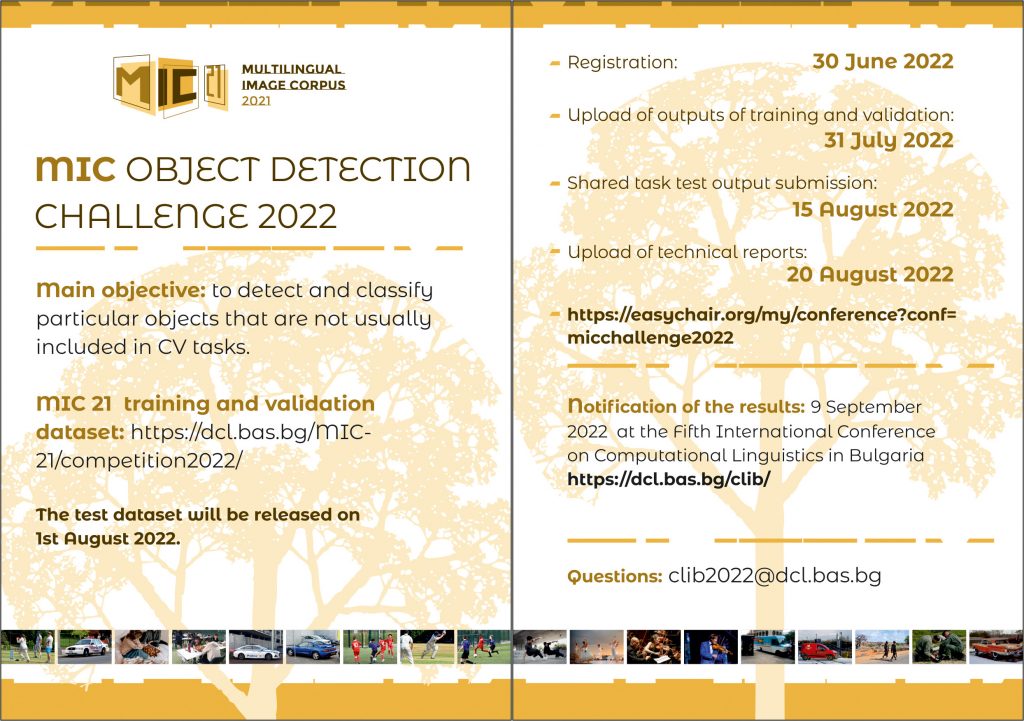

Important dates

Shared task announcement: 1 June 2022

Release of training and validation dataset: 1 June 2022

Registration deadline: 30 June 2022

Upload of outputs of training and validation: 31 July 2022

Release of test dataset: 1 August 2022

Shared task test output submission: 15 August 2022

Upload of technical reports: 20 August 2022

Notification of acceptance: 25 August 2022

Camera-ready of shared task technical reports: 5 September 2022

Notification of the results of the competition: 9 September 2022

Organisers

Svetla Koeva

Jose Manuel Gomez Perez

Yordan Kralev

Ivelina Stoyanova

Svetlozara Leseva

Maria Todorova

If you have any questions, please email the Organisers at clib2022@dcl.bas.bg.